Gesture-based interaction with virtual 3D environments

| | |
|---|---|
| Led by: | Colin Fischer |
| Team: | Florian Politz |
| Year: | 2016 |
| Duration: | 2016 |
| Is Finished: | yes |

With increasingly powerful computing technology available in the home and leisure sector, a race to develop affordable virtual reality (VR) and augmented reality (AR) hardware broke out a few years ago, aimed in particular at the realistic presentation of 3D content (e.g. computer games). The core of this technology is the processing of three-dimensional information into pairs of 2D stereo images, which are viewed through suitable output hardware (glasses or headsets). This principle can, however, also be applied elsewhere, for example to explore and interact with 3D spatial data more effectively.
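To illustrate what "2D stereo image pairs" means geometrically, here is a minimal sketch assuming an idealized model with two parallel pinhole cameras (one per eye) separated by the interpupillary distance. It ignores lens distortion and the actual per-eye projection a headset such as the Oculus Rift DK2 uses; all parameter values are assumptions. The horizontal offset between the two images (the disparity, $d = f\,b / z$) shrinks with depth, and that offset is what the brain interprets as distance.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class StereoCamera:
    focal_px: float  # focal length in pixels (assumed value)
    ipd_m: float     # interpupillary distance (stereo baseline) in metres (assumed value)
    cx: float        # principal point x in pixels
    cy: float        # principal point y in pixels

    def project(self, x: float, y: float, z: float) -> tuple[tuple[float, float], tuple[float, float]]:
        """Project a 3D point (head frame, z pointing forward, metres) into left and right eye images."""
        if z <= 0:
            raise ValueError("point must lie in front of the viewer (z > 0)")
        half = self.ipd_m / 2.0
        # The left eye sits at x = -half, the right eye at x = +half.
        u_left = self.cx + self.focal_px * (x + half) / z
        u_right = self.cx + self.focal_px * (x - half) / z
        v = self.cy + self.focal_px * y / z  # same image row in both eyes for parallel cameras
        return (u_left, v), (u_right, v)

    def disparity(self, z: float) -> float:
        """Horizontal disparity in pixels between the two eye images: d = f * b / z."""
        return self.focal_px * self.ipd_m / z
```

For example, with `StereoCamera(focal_px=800.0, ipd_m=0.064, cx=640.0, cy=400.0)` a point 2 m ahead appears 25.6 px apart in the two images, while a point 4 m ahead appears only half as far apart.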

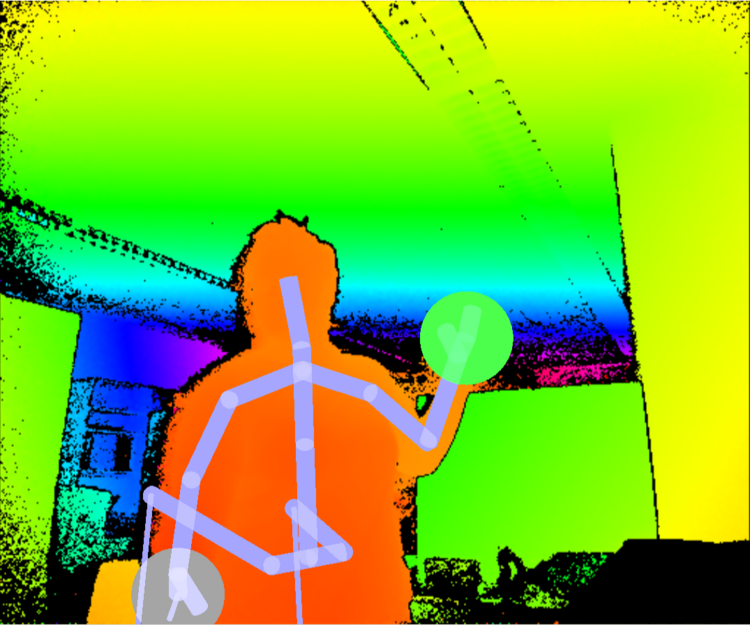

In this master's thesis, a VR environment was developed in which users are placed in a scene captured by laser scanning. They can move freely through the scene using body gestures and interact with the point cloud: for example, objects can be selected with a pointing gesture and then deleted from the scene or assigned labels as part of a classification task. The realistic presentation makes it easier for users to assess the real-world situation and thus supports their decisions.
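The thesis does not describe how the pointing gesture is mapped to an object. A minimal sketch of one plausible approach: cast a ray from the shoulder through the hand, collect the points within a narrow cone around that ray, and select the segment (object) of the nearest such point. It assumes per-point segment ids from a pre-segmentation; the names `pick_segment`, `remove_segment`, `label_segment` and the 3° cone are hypothetical choices for illustration.

```python
import math
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def pick_segment(shoulder: Vec3, hand: Vec3, points: Sequence[Vec3],
                 segment_ids: Sequence[int], max_angle_deg: float = 3.0) -> Optional[int]:
    """Return the segment id of the nearest point inside a narrow cone around the
    shoulder-to-hand pointing ray, or None if no point lies in the cone."""
    d = _sub(hand, shoulder)
    length = math.sqrt(_dot(d, d))
    if length == 0.0:
        return None  # degenerate pose: no pointing direction
    d = (d[0] / length, d[1] / length, d[2] / length)
    cos_limit = math.cos(math.radians(max_angle_deg))
    best_t, best_id = math.inf, None
    for p, seg in zip(points, segment_ids):
        v = _sub(p, hand)   # the ray starts at the hand
        t = _dot(v, d)      # distance along the ray
        if t <= 0.0:
            continue        # point lies behind the hand
        # Inside the cone if the angle between v and the ray is small enough.
        if t / math.sqrt(_dot(v, v)) >= cos_limit and t < best_t:
            best_t, best_id = t, seg
    return best_id


def remove_segment(points: Sequence[Vec3], segment_ids: Sequence[int],
                   seg: int) -> Tuple[List[Vec3], List[int]]:
    """Delete every point that belongs to the selected object (segment)."""
    kept = [(p, s) for p, s in zip(points, segment_ids) if s != seg]
    return [p for p, _ in kept], [s for _, s in kept]


def label_segment(labels: dict, seg: int, label: str) -> None:
    """Assign a class label to the selected object, e.g. during a classification task."""
    labels[seg] = label
```

Selecting the nearest point along the ray means that an object in front occludes objects behind it, which matches what the user sees in the headset.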

The VR environment is implemented with the Unity 5 game engine, which renders the point cloud on an Oculus Rift DK2 headset. For gesture-based control, a Microsoft Kinect 2 sensor detects the user's previously trained body gestures in real time, enabling completely contact-free interaction with the data.
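The text does not say how the gestures were trained or recognized. As a deliberately simple stand-in (not the thesis's method), the sketch below trains a nearest-centroid classifier on skeleton joint positions expressed relative to the spine base, rejects poses that are far from every trained gesture, and debounces the output so that a gesture is only reported after several consecutive frames. The frame format (a dict of joint name to `(x, y, z)`), the joint subset, and the thresholds are assumptions.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

Frame = Dict[str, Tuple[float, float, float]]  # hypothetical: joint name -> position in metres

JOINTS = ("HandLeft", "HandRight", "ElbowLeft", "ElbowRight")  # subset chosen for illustration
ROOT = "SpineBase"


def features(frame: Frame) -> List[float]:
    """Joint positions relative to the spine base, so the user's location does not matter."""
    rx, ry, rz = frame[ROOT]
    vec: List[float] = []
    for j in JOINTS:
        x, y, z = frame[j]
        vec.extend((x - rx, y - ry, z - rz))
    return vec


class NearestCentroidGestures:
    def __init__(self, reject_distance: float):
        self.reject_distance = reject_distance
        self.centroids: Dict[str, List[float]] = {}

    def fit(self, samples: Sequence[Frame], labels: Sequence[str]) -> "NearestCentroidGestures":
        """Average the feature vectors of all training frames of each gesture."""
        sums: Dict[str, List[float]] = {}
        counts: Dict[str, int] = {}
        for frame, label in zip(samples, labels):
            f = features(frame)
            acc = sums.setdefault(label, [0.0] * len(f))
            sums[label] = [a + v for a, v in zip(acc, f)]
            counts[label] = counts.get(label, 0) + 1
        self.centroids = {lab: [s / counts[lab] for s in sums[lab]] for lab in sums}
        return self

    def predict(self, frame: Frame) -> Optional[str]:
        """Return the closest gesture, or None if the pose is far from all of them."""
        f = features(frame)
        best, best_d = None, math.inf
        for label, c in self.centroids.items():
            d = math.dist(f, c)
            if d < best_d:
                best, best_d = label, d
        return best if best_d <= self.reject_distance else None


class Debouncer:
    """Report a gesture only after it was predicted in `frames` consecutive frames."""

    def __init__(self, frames: int):
        self.frames = frames
        self.last: Optional[str] = None
        self.count = 0

    def update(self, label: Optional[str]) -> Optional[str]:
        if label is not None and label == self.last:
            self.count += 1
        else:
            self.last, self.count = label, (1 if label is not None else 0)
        return label if label is not None and self.count >= self.frames else None
```

The rejection threshold keeps ordinary movement from triggering actions, and the debouncing trades a few frames of latency for fewer accidental commands, both of which matter for contact-free control.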

The environment uses point cloud data sets acquired by a mobile mapping system (laser scanning). The implementation was designed to scale well with the amount of data: the data are organized in tiles, which are dynamically loaded into main memory or removed from it on an event-driven basis. The tiles can also carry information from a pre-segmentation of the data, which allows interaction within the VR environment at the object level rather than at the level of individual points.
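The tiling scheme and the exact events are not specified in the text. A minimal sketch of event-driven tile management, assuming square tiles on the horizontal plane, a fixed neighbourhood radius around the viewer, and a hypothetical `load_tile` callback that reads one tile (points plus any pre-segmentation ids) from disk: work is done only when the viewer crosses into a different tile, at which point tiles outside the neighbourhood are evicted and missing ones are loaded.

```python
import math
from typing import Callable, Dict, Optional, Set, Tuple

TileKey = Tuple[int, int]


class TileCache:
    """Keeps only the tiles around the viewer in memory."""

    def __init__(self, tile_size: float, radius: int, load_tile: Callable[[TileKey], object]):
        self.tile_size = tile_size    # tile edge length in metres (assumed square tiles)
        self.radius = radius          # neighbourhood radius in tiles
        self.load_tile = load_tile    # hypothetical loader, e.g. reads a tile file from disk
        self.loaded: Dict[TileKey, object] = {}
        self._current: Optional[TileKey] = None

    def tile_of(self, x: float, y: float) -> TileKey:
        """Map horizontal coordinates to the key of the tile containing them."""
        return (math.floor(x / self.tile_size), math.floor(y / self.tile_size))

    def on_viewer_moved(self, x: float, y: float) -> bool:
        """Event handler; returns True if the viewer entered a different tile and
        the set of loaded tiles was updated, False otherwise."""
        key = self.tile_of(x, y)
        if key == self._current:
            return False  # still inside the same tile: nothing to do
        self._current = key
        ci, cj = key
        r = self.radius
        wanted: Set[TileKey] = {(ci + di, cj + dj)
                                for di in range(-r, r + 1) for dj in range(-r, r + 1)}
        # Evict tiles that are no longer in the neighbourhood.
        for k in list(self.loaded):
            if k not in wanted:
                del self.loaded[k]
        # Load tiles that have entered the neighbourhood.
        for k in wanted:
            if k not in self.loaded:
                self.loaded[k] = self.load_tile(k)
        return True
```

With `radius=1`, nine tiles are held in memory; stepping into a neighbouring tile unloads one row or column of three tiles and loads three new ones, so memory use stays constant regardless of the total size of the data set.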